Google's "Code Red" Panic Over ChatGPT - A Skeptic's Take

Will ChatGPT set off an earthquake that swallows Google? Unlikely, despite a "gee whiz" article making the rounds.

The New York Times claims that when ChatGPT was released, Google management declared a "Code Red," which was "akin to pulling the fire alarm." This is according to anonymous sources.

The Times also said it viewed a "memo" and listened to an "audio recording" of a Google meeting, but it didn't publish these items. So readers can't assess them for themselves.

The article quickly brings in the proverbial "Some fear . . .".

Some fear the company may be approaching a moment that the biggest Silicon Valley outfits dread — the arrival of an enormous technological change that could upend the business.

But only a few paragraphs into the story we read that "the technology at the heart of OpenAI’s chat bot was developed by researchers at Google." (This is a reference to LaMDA.) So it's hard to believe the search giant was flailing about, blindsided.

"Code Red" and "alarm" along with a few "experts" offering warnings, make for a sexy story. But is that drama inherent in the story?

The authors suggest that this technology could "cannibalize" Google's "lucrative search ads."

If a chat bot is responding to queries with tight sentences, there is less reason for people to click on advertising links.

Engaging and Conversational

ChatGPT is engaging and fast. The sleek interface evokes proximity and emulates one-on-one conversation. Before you know it, you'll be adding niceties to your interactions, like "Merry Christmas, ChatGPT."

"Assistant," as ChatGPT prefers to be called, seems confident and produces well-formed sentences and paragraphs, even when its answers include errors.[1] It can correct itself and refine its answers in dialogue.

People have been sharing creative ways to use ChatGPT to brainstorm ideas and as a productivity tool. These areas seem particularly promising.

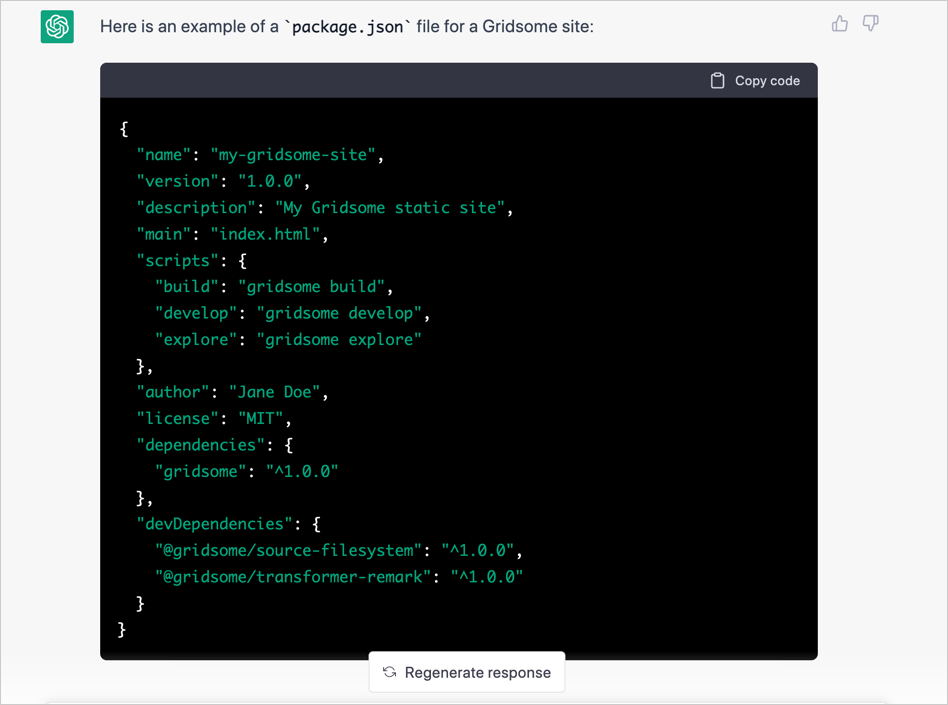

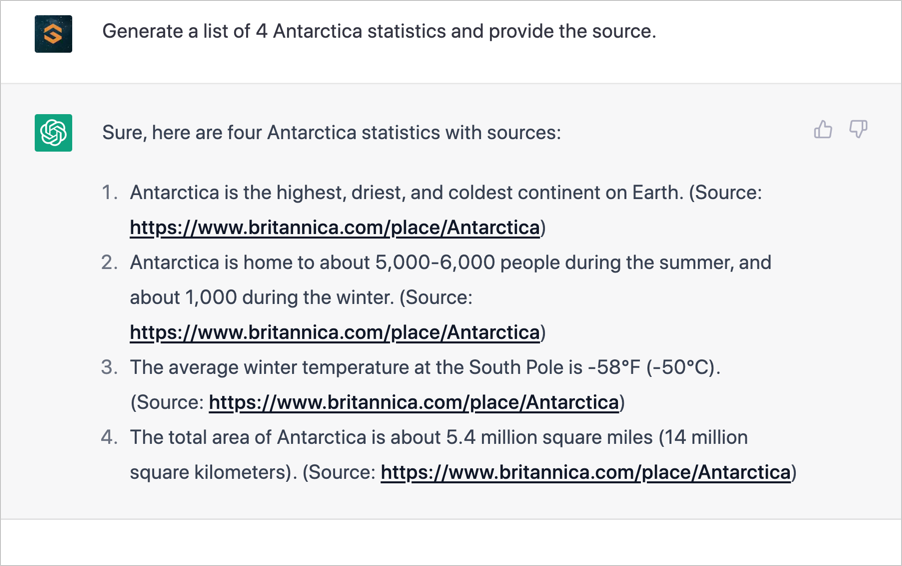

It can summarize documents, format information, generate ideas, create lists of statistics, provide sentiment analysis, and code for you.

The bot delivers just one answer, saving you time. It's easy to see the appeal, especially for simple questions or coding questions. (See this.)

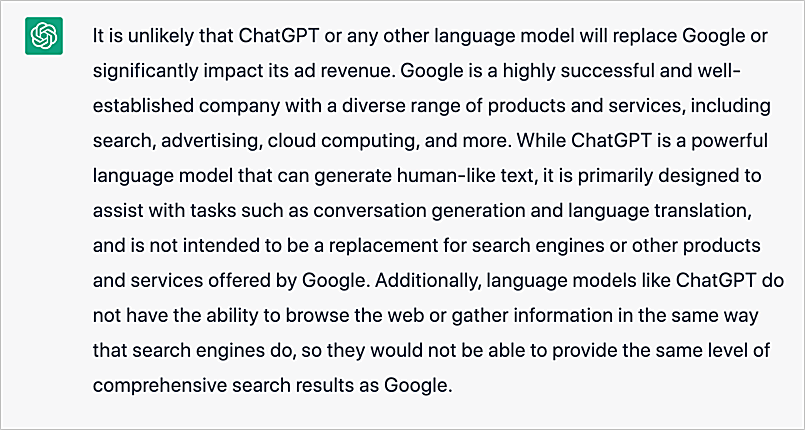

However, ChatGPT doesn't index content or crawl the web. It's not a search engine. It has a different mission than Google Search, and is at the opposite end of the maturity scale. So the idea of it demolishing Google's ad revenue seems fanciful.

A new Google Search interface?

Also, Google could choose to develop an advanced version of ChatGPT, offer it as a search interface option, and monetize it.

When you sign up for OpenAI.com (in order to use ChatGPT) you can sign in with your Gmail account. That's one avenue to monetization: capture identity (assign a unique identifier to each user) and couple it to queries.
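To make the "capture identity and couple it to queries" idea concrete, here is a minimal sketch. It is not how OpenAI or Google actually does this; the `QueryLog` class, its method names, and the example email address are all hypothetical, and a real system would use persistent storage rather than in-memory dictionaries.

```python
import uuid
from collections import defaultdict


class QueryLog:
    """Toy in-memory store linking each signed-in account to its queries.

    Hypothetical illustration only; not any vendor's actual design.
    """

    def __init__(self):
        self._ids = {}                     # normalized email -> opaque identifier
        self._queries = defaultdict(list)  # identifier -> list of query strings

    def user_id(self, email):
        # Normalize so "Reader@example.com" and "reader@example.com" match.
        key = email.strip().lower()
        if key not in self._ids:
            # Assign a random unique identifier the first time we see the account.
            self._ids[key] = uuid.uuid4().hex
        return self._ids[key]

    def record(self, email, query):
        # Couple the query to the account's identifier and return that identifier.
        uid = self.user_id(email)
        self._queries[uid].append(query)
        return uid

    def history(self, email):
        # Return a copy so callers cannot mutate the stored list.
        return list(self._queries[self.user_id(email)])


log = QueryLog()
log.record("Reader@example.com", "ski packages in Vermont")
log.record("reader@example.com", "lambskin bomber jackets")
print(log.history("reader@example.com"))
```

Once queries hang off a stable identifier like this, the accumulated history is the raw material an ad system would need to target a user.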

And there's nothing preventing a service like ChatGPT from posting ads on its pages.

. . . ChatGPT is currently viewable in Google Search

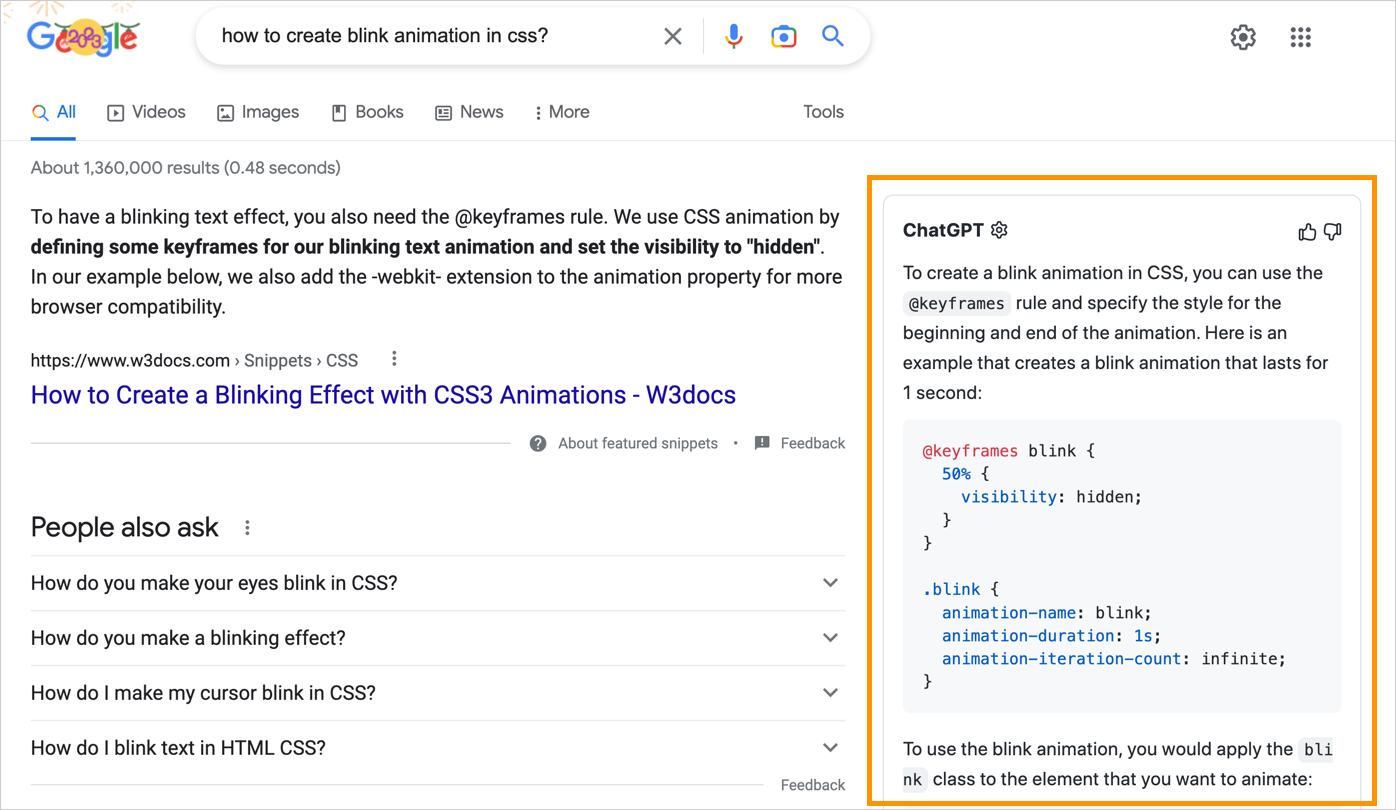

This ChatGPT for Google Chrome extension causes the bot's answers to appear in Google search results. (This display is courtesy of the browser; ChatGPT isn't actually operating inside of Google Search.) We'll use this extension to do some side-by-side comparisons.

The developer's example (a search for "how to create blink animations in CSS") provides a good example of ChatGPT at its best. The chatbot immediately delivers actual code to copy-paste, while Google's results are topped with a featured snippet and the search engine's reminder of just how many results it has for you: "About 1,360,000 results."

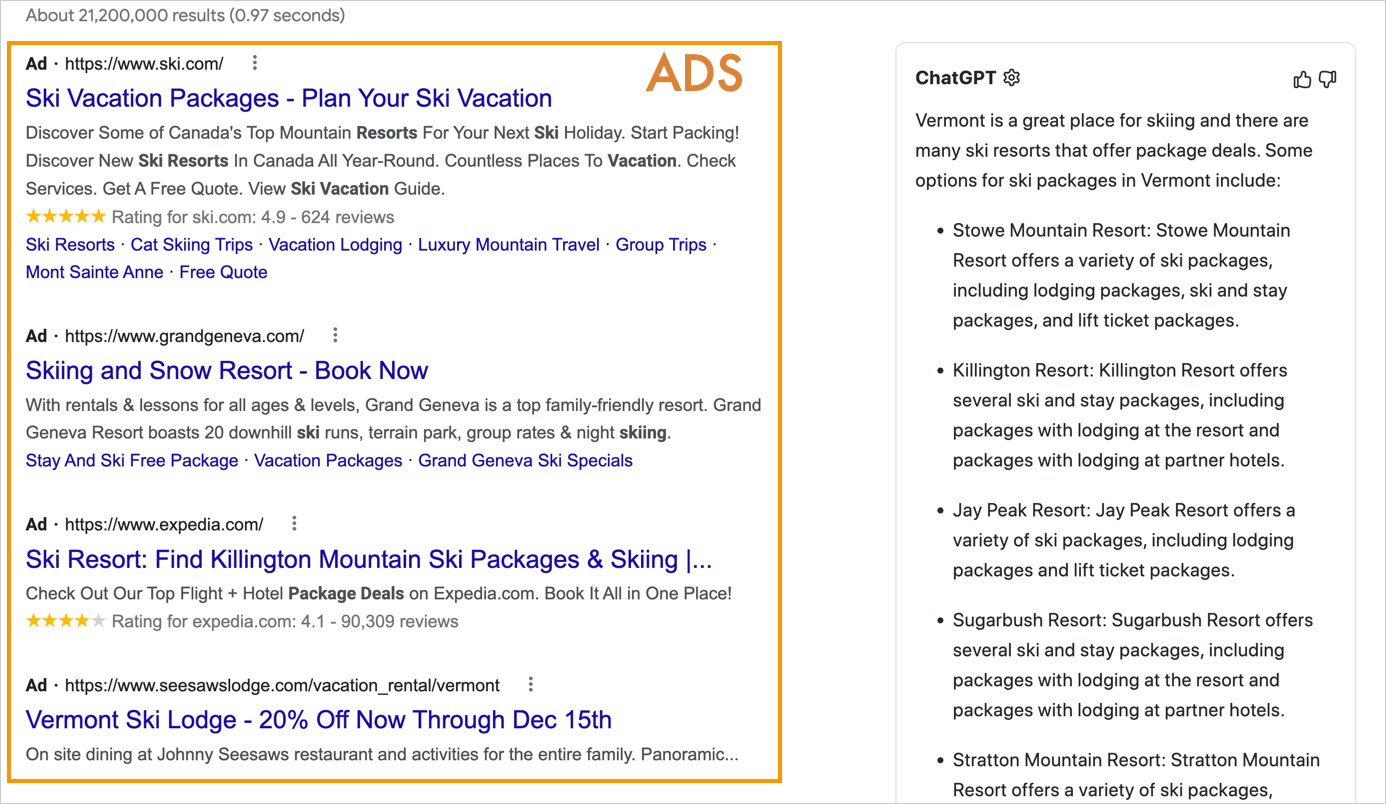

A search for "ski packages in Vermont" generated a long list of ads at the top of Google, compared to the ad-free ChatGPT response.

Some users may prefer ad-free recommendations, but ChatGPT didn't provide any hyperlinks, ratings, discounts, or contact information. Not very helpful.

It's easy to cherry-pick ChatGPT wins (or potential wins). You're still stuck with lots of non-results.

ChatGPT vs Google Search

In comparing ChatGPT with Google, it's worth noting what the former doesn't do.

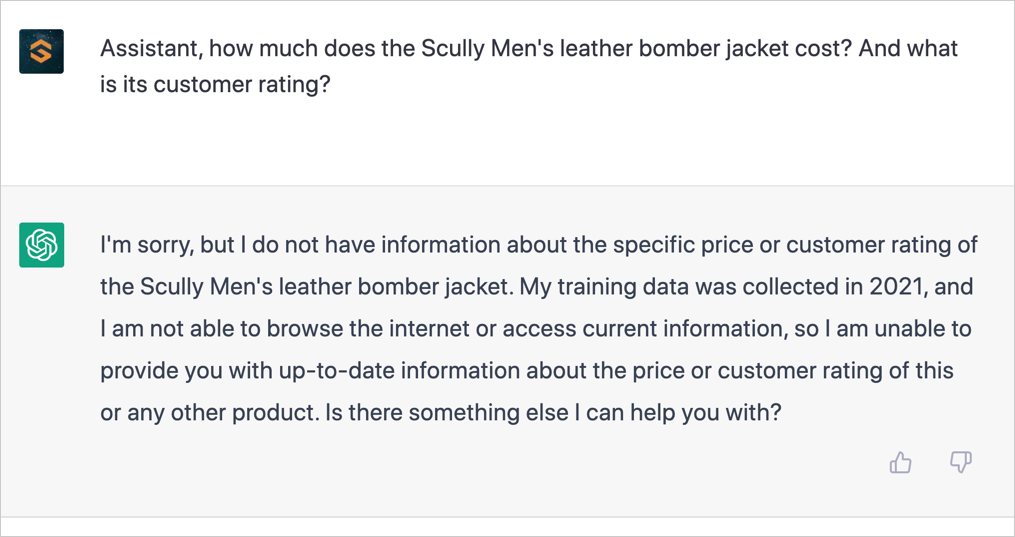

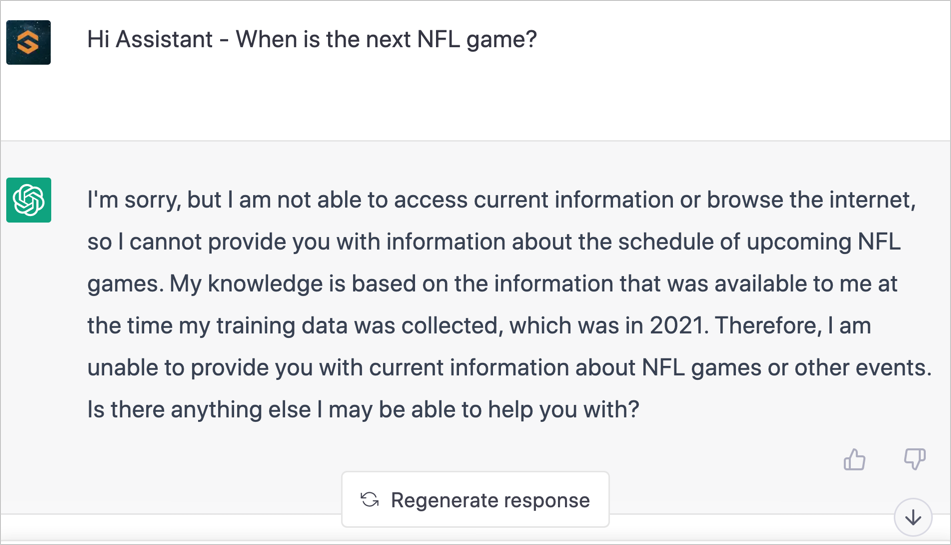

Sorry, no internet access

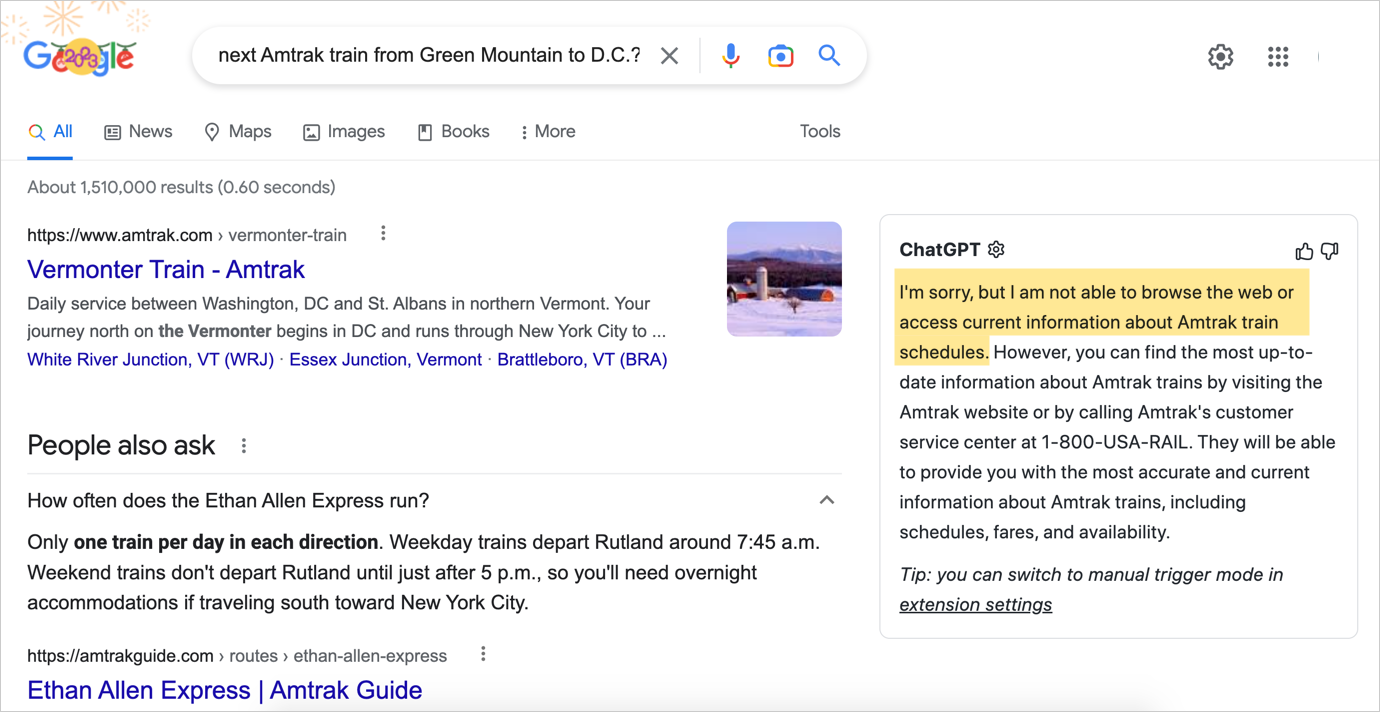

For example, ChatGPT can't currently access the internet to retrieve information. And it doesn't seem to provide users with clickable references or sources unless you ask it to.

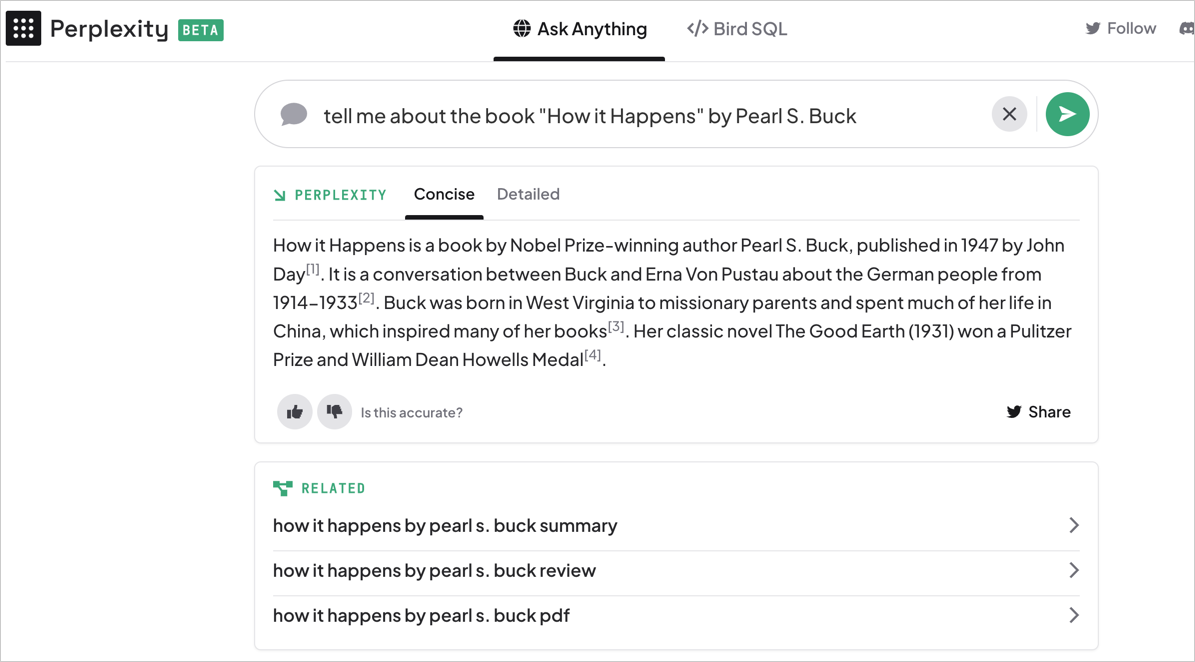

In this, it differs from the OpenAI-search engine hybrid Perplexity.ai, which includes clickable footnotes and citations with all answers.

Also consider that countless queries are made on Google Search for up-to-date stock prices, tickets, event times, train and flight schedules, traffic delays, products, and more.

What search intent?

ChatGPT isn't designed to understand or satisfy search intent, a fulcrum that moves billions of dollars in commerce.

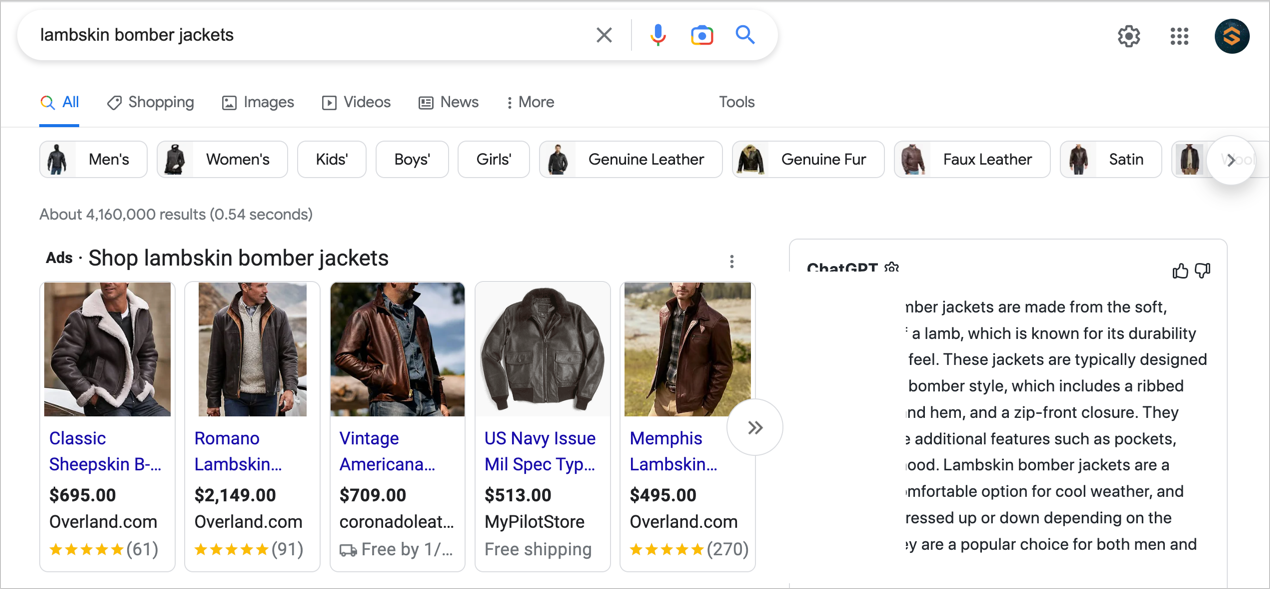

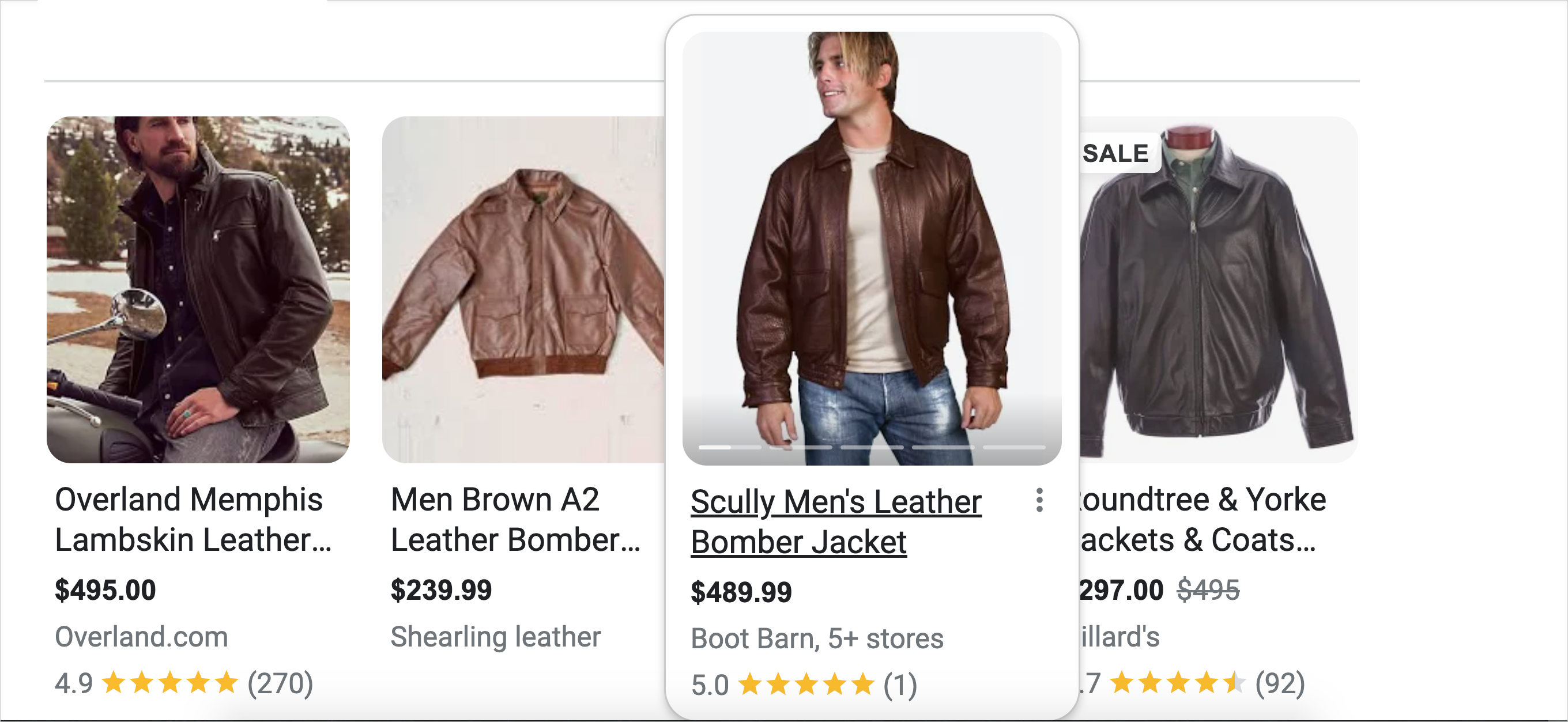

This Google search below for "lambskin bomber jackets" yields results with images, prices, and customer ratings.

Google understands the search intent is shopping. It wants to know more about what kind of lambskin bomber jacket you're shopping for: "men's," "women's," "genuine leather," "faux fur" and so on.

ChatGPT just spits out a description of what a lambskin bomber jacket is. (Note: The ChatGPT Chrome extension interferes with Google's display of the jackets, which is partially cut off.)

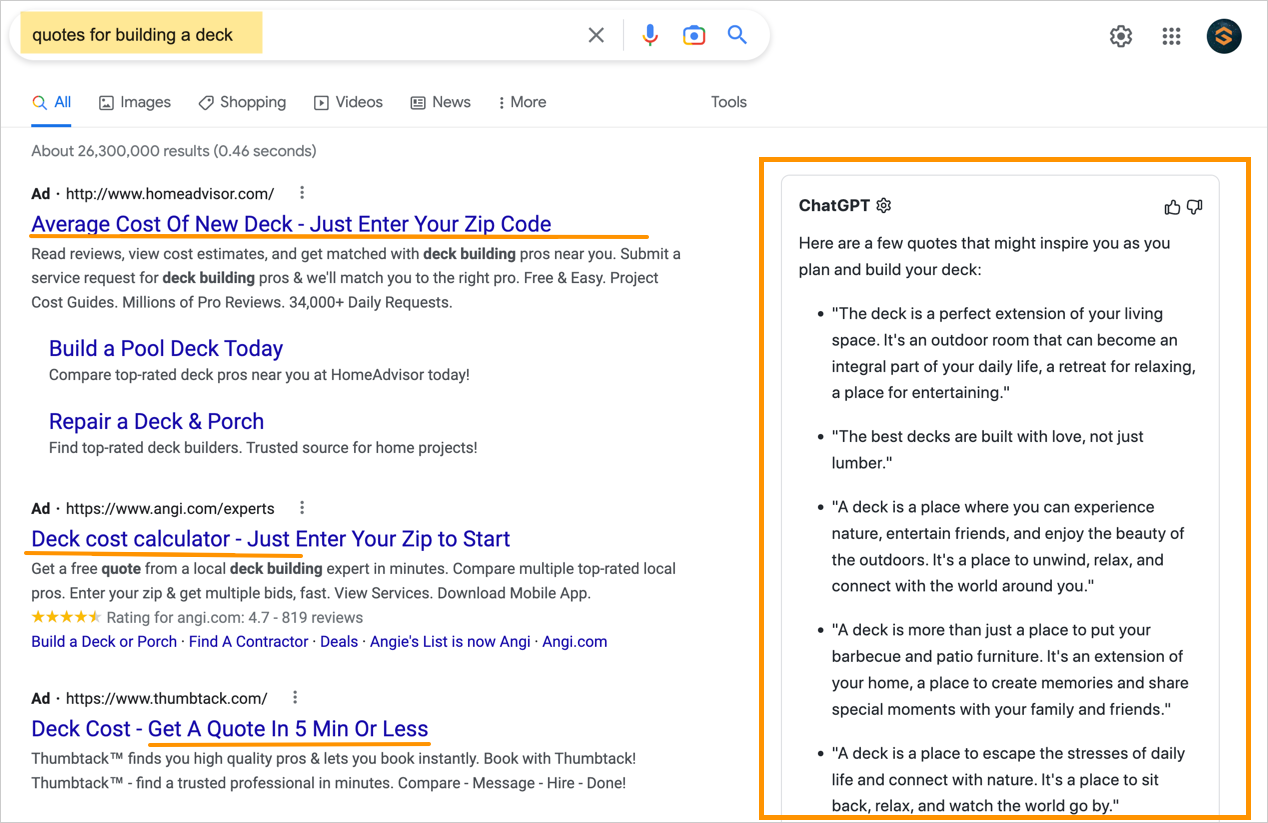

Here's an example of ChatGPT failing to recognize the commercial intent of a search term: "quotes for building a deck." Contrast its answer with Google's top results.

Accuracy and Quality

Google continuously improves the quality of search results. It's done breakthrough work on identifying quality content and accuracy. In contrast, Twitter's now filled with humorous ChatGPT fails.

Indeed, Stack Overflow banned ChatGPT over concerns that users would flood the site with faulty code, a form of "confident B.S."

Overall, because the average rate of getting correct answers from ChatGPT is too low, the posting of answers created by ChatGPT is substantially harmful to the site and to users who are asking or looking for correct answers.

Other limitations

Development of schema and structured data has given us interactive search results like these. (They look like ads but they're not.) Hover over a jacket and you'll get 5 images that fade in and out, showing multiple angles.

But ChatGPT can't answer queries like this:

Or like this:

It also doesn't proactively make suggestions: it waits for you to ask a question.

This is in contrast to Google Search, which, through auto-suggest and personalized search results, is always making recommendations based on your interactions with it.

And how likely is it that people will suddenly want text only and lose their interest in browsing video, audio, and images?

"a misleading impression of greatness "

The point isn't that ChatGPT should be more like Google. It's to underscore the fact that the two products function differently and serve different purposes, although there is overlap, as in providing encyclopedia-style facts.

Zoubin Ghahramani, leader of Google Brain, put it well when he told the Times last month:

A cool demo of a conversational system that people can interact with over a few rounds, and it feels mind-blowing? That is a good step, but it is not the thing that will really transform society.

It is not something that people can use reliably on a daily basis.

The CEO of OpenAI (maker of ChatGPT) seemed to concur when he tweeted:

ChatGPT is incredibly limited, but good enough at some things to create a misleading impression of greatness.

It's a mistake to be relying on it for anything important right now. it’s a preview of progress; we have lots of work to do on robustness and truthfulness.

"turbocharged pastiche generator"

AI researcher Gary Marcus predicts that the release of GPT-4 next year will be mind-blowing and yet, because its design is fundamentally flawed, it will "remain a turbocharged pastiche generator."

Perhaps one reason ChatGPT's current text performance is overestimated is that, in some ways, it's an analogue of our own propensity to generate and reward confident B.S.

Meanwhile, developers are enthusiastically adopting ChatGPT and GitHub Copilot to provide AI-based pair programming.[2] Forrester predicts that by 2028 TuringBots will "mature drastically." And marketers continue to promote creative uses for the chatbot, such as generating proposals, product summaries, and email.

About that 'Code Red' . . .

The Times article seems speculative at best. Nevertheless, some SEO professionals are playing up the 'Code Red threat' in their social media comments.

For fun, let's give the robot the last word.

1. Artificial intelligence can produce "hallucinations," known colloquially as "confident B.S."

2. According to a recent GitHub evaluation, "[U]sers accepted on average 26% of all completions shown by GitHub Copilot. We also found that on average more than 27% of developers' code files were generated by GitHub Copilot, and in certain languages like Python that goes up to 40%." It adds, "However, GitHub Copilot does not write perfect code. It is designed to generate the best code possible given the context it has access to, but it doesn't test the code it suggests so the code may not always work, or even make sense. GitHub Copilot can only hold a very limited context, so it may not make use of helpful functions defined elsewhere in your project or even in the same file. And it may suggest old or deprecated uses of libraries and languages. When converting comments written in non-English to code, there may be performance disparities when compared to English. For suggested code, certain languages like Python, JavaScript, TypeScript, and Go might perform better compared to other programming languages." See the FAQ "Does GitHub Copilot write perfect code?"